Configure FAIR Scheduler in Spark

By default, Apache Spark schedules jobs in FIFO (First In, First Out) order.

To configure the Fair Scheduler in Spark 1.1.0, make the following changes:

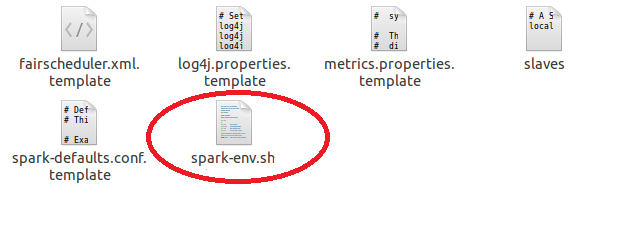

1. Go to the conf folder in your Spark home directory. If it has no spark-env.sh yet, create one by copying spark-env.sh.template.


2. Open spark-env.sh and add the following lines:

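```bash
# Switch job scheduling within the application from FIFO to FAIR
SPARK_JAVA_OPTS="-Dspark.scheduler.mode=FAIR"
# Point Spark at the pool definitions; the leading space keeps the two options separate
SPARK_JAVA_OPTS+=" -Dspark.scheduler.allocation.file=$SPARK_HOME/conf/fairscheduler.xml"
```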

The second line sets the path of the fairscheduler.xml file that defines the scheduler pools.
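Because bash's `+=` appends text verbatim, the appended string has to begin with a space; otherwise the two `-D` options fuse into one malformed argument. The short bash sketch below builds both versions and prints them so the difference is visible. The `/opt/spark` fallback for `SPARK_HOME` is only a placeholder for illustration, and `BROKEN` is a hypothetical variable name used for the counter-example.

```bash
#!/usr/bin/env bash
# Placeholder path for illustration only; a real setup uses the actual Spark home.
SPARK_HOME="${SPARK_HOME:-/opt/spark}"

# Correct: the appended string starts with a space, so the JVM sees two -D options.
SPARK_JAVA_OPTS="-Dspark.scheduler.mode=FAIR"
SPARK_JAVA_OPTS+=" -Dspark.scheduler.allocation.file=$SPARK_HOME/conf/fairscheduler.xml"
echo "correct: $SPARK_JAVA_OPTS"

# Broken (hypothetical counter-example): without the space, both options run together.
BROKEN="-Dspark.scheduler.mode=FAIR"
BROKEN+="-Dspark.scheduler.allocation.file=$SPARK_HOME/conf/fairscheduler.xml"
echo "broken:  $BROKEN"
```

In the broken version the JVM would receive a single system property named `spark.scheduler.mode` with the whole remaining string as its value, so neither setting takes effect as intended.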

Sample contents of fairscheduler.xml:

```xml
<?xml version="1.0"?>
<allocations>
  <pool name="test1">
    <schedulingMode>FAIR</schedulingMode>
    <weight>1</weight>
    <minShare>2</minShare>
  </pool>
  <pool name="test2">
    <schedulingMode>FIFO</schedulingMode>
    <weight>2</weight>
    <minShare>3</minShare>
  </pool>
</allocations>
```
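If you prefer to create the file from the shell, the bash sketch below writes the same two sample pools with a heredoc. It assumes `SPARK_HOME` points at your Spark installation and falls back to `./conf` when it is unset; the `CONF_DIR` override is a hypothetical convenience for trying the script in a scratch directory, not a Spark setting.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical override: defaults to $SPARK_HOME/conf, or ./conf if SPARK_HOME is unset.
CONF_DIR="${CONF_DIR:-${SPARK_HOME:-.}/conf}"
mkdir -p "$CONF_DIR"

# The quoted 'EOF' stops the shell from expanding anything inside the XML.
cat > "$CONF_DIR/fairscheduler.xml" <<'EOF'
<?xml version="1.0"?>
<allocations>
  <pool name="test1">
    <schedulingMode>FAIR</schedulingMode>
    <weight>1</weight>
    <minShare>2</minShare>
  </pool>
  <pool name="test2">
    <schedulingMode>FIFO</schedulingMode>
    <weight>2</weight>
    <minShare>3</minShare>
  </pool>
</allocations>
EOF

# Quick sanity check: list the pool names that were written.
grep -o 'pool name="[^"]*"' "$CONF_DIR/fairscheduler.xml"
```

The path written here must match the one passed in `spark.scheduler.allocation.file`.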

Each tag in the XML sets one of the following pool properties:

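1. schedulingMode - Controls how jobs within the pool are scheduled. It can be set to FAIR (jobs in the pool share its resources) or FIFO (jobs queue up behind each other). The default is FIFO.

2. weight - Controls the pool's share of the cluster relative to other pools. The higher the weight, the larger the share of resources the pool receives; for example, a pool with weight 2 gets twice the resources of an active pool with weight 1. By default, all pools have a weight of 1.

3. minShare - The minimum number of CPU cores the pool should receive. When resources are scarce, the fair scheduler tries to meet every active pool's minShare before distributing the remaining cores according to weight. The default is 0.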
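To see how weight and minShare interact, the bash sketch below runs a simplified model of pool ordering, loosely based on the rules Spark's fair scheduler uses to rank pools: a pool below its minShare is served before one that has reached it; two pools both below minShare are compared by running cores divided by minShare; otherwise by running cores divided by weight; and the pool name breaks ties. It hands out the cores of a hypothetical 10-core cluster one at a time to the sample pools, assuming both pools always have pending tasks and each task uses one core. Real Spark scheduling involves more factors, so treat the numbers as illustrative only.

```bash
#!/usr/bin/env bash
# Simplified model, not Spark code. Assumes both pools always have pending
# tasks and every task occupies exactly one core.
set -euo pipefail

TOTAL_CORES=10                 # hypothetical cluster size
names=(test1 test2)            # pool settings copied from the sample fairscheduler.xml
weights=(1 2)
min_shares=(2 3)
running=(0 0)                  # cores currently held by each pool

# Succeeds (status 0) if pool $1 should be served before pool $2.
ranks_before() {
  local i=$1 j=$2
  local ri=${running[i]} rj=${running[j]}
  local mi=${min_shares[i]} mj=${min_shares[j]}
  local needy_i=$(( ri < mi )) needy_j=$(( rj < mj ))

  # A pool still below its minShare beats one that has reached it.
  if (( needy_i != needy_j )); then
    (( needy_i ))
    return
  fi

  # Compare ri/a with rj/b by cross-multiplying (bash only has integer arithmetic).
  local a b
  if (( needy_i )); then
    # Both below minShare: lower running/minShare ratio first (minShare floored at 1).
    a=$(( mi > 1 ? mi : 1 )); b=$(( mj > 1 ? mj : 1 ))
  else
    # Both at or above minShare: lower running/weight ratio first.
    a=${weights[i]}; b=${weights[j]}
  fi
  local lhs=$(( ri * b )) rhs=$(( rj * a ))
  if (( lhs != rhs )); then
    (( lhs < rhs ))
    return
  fi

  # Tie: fall back to comparing pool names.
  [[ ${names[i]} < ${names[j]} ]]
}

# Give each core to whichever pool currently ranks first.
for (( core = 1; core <= TOTAL_CORES; core++ )); do
  if ranks_before 0 1; then winner=0; else winner=1; fi
  running[winner]=$(( running[winner] + 1 ))
done

for i in "${!names[@]}"; do
  echo "${names[i]}: ${running[i]} cores"
done
```

Run as-is, it prints `test1: 4 cores` and `test2: 6 cores`. Both pools first climb to their minShare (2 and 3 cores); after that, each new core goes to the pool with the fewest running cores per unit of weight, which is why test2 (weight 2) ends up with the larger share.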
