Configure FAIR Scheduler in Spark

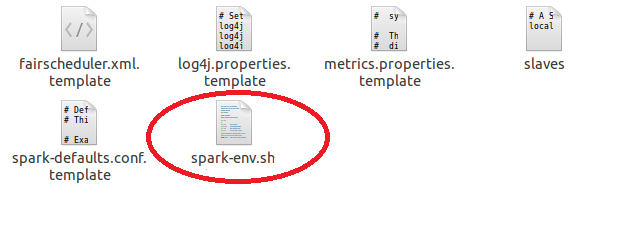

By default, Apache Spark uses FIFO (First In, First Out) scheduling. To configure the Fair Scheduler in Spark 1.1.0, make the following changes:

1. Go to the conf folder in your Spark home directory.
2. Open spark-env.sh in that folder and add the following lines:

    SPARK_JAVA_OPTS="-Dspark.scheduler.mode=FAIR"
    SPARK_JAVA_OPTS+=" -Dspark.scheduler.allocation.file=$SPARK_HOME_PATH/conf/fairscheduler.xml"

The second line sets the path of the fair scheduler XML file. The leading space inside its quotes keeps the two options separate when the second is appended to the first.

Sample contents of fairscheduler.xml:

    <?xml version="1.0"?>
    <allocations>
      <pool name="test1">
        <schedulingMode>FAIR</schedulingMode>
        <weight>1</weight>
        <minShare>2</minShare>
      </pool>
      <pool name="test2">
        <schedulingMode>FIFO</schedulingMode>
        <weight>2</weight>
        <minShare>3</minShare>
      </pool>
      ...
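Before restarting Spark, it can help to sanity-check an allocation file like the sample above. The following is a minimal sketch in Python, not part of Spark itself: `read_pools` and `SAMPLE` are hypothetical names made up for this illustration, and the sample string is closed with `</allocations>` only so that it parses (a real file may define more pools). The fallback values assume Spark's documented pool defaults (FIFO mode, weight 1, minShare 0).

```python
import xml.etree.ElementTree as ET

# Illustrative allocation file with the two pools from the sample,
# closed with </allocations> so the parser accepts it.
SAMPLE = """<?xml version="1.0"?>
<allocations>
  <pool name="test1">
    <schedulingMode>FAIR</schedulingMode>
    <weight>1</weight>
    <minShare>2</minShare>
  </pool>
  <pool name="test2">
    <schedulingMode>FIFO</schedulingMode>
    <weight>2</weight>
    <minShare>3</minShare>
  </pool>
</allocations>
"""


def read_pools(xml_text):
    """Return {pool name: (schedulingMode, weight, minShare)} for each <pool>."""
    pools = {}
    for pool in ET.fromstring(xml_text).findall("pool"):
        # Missing elements fall back to the assumed defaults: FIFO, 1, 0.
        mode = pool.findtext("schedulingMode", default="FIFO").strip().upper()
        if mode not in ("FAIR", "FIFO"):
            raise ValueError(f"unknown schedulingMode: {mode!r}")
        # int() raises ValueError if the value is not a whole number.
        weight = int(pool.findtext("weight", default="1"))
        min_share = int(pool.findtext("minShare", default="0"))
        pools[pool.get("name")] = (mode, weight, min_share)
    return pools


if __name__ == "__main__":
    for name, settings in read_pools(SAMPLE).items():
        print(name, settings)
```

To check a real file, read its text and pass it to `read_pools`; a parse error or `ValueError` points to a malformed pool definition.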